A/B Testing in Mobile Marketing: The Key to Performance

Introduction

In the world of digital marketing, A/B testing is an essential tool for optimizing campaign performance. Whether you’re a brand developing mobile apps or a business seeking to improve ROI, A/B testing enables data-driven decision-making. But what exactly is A/B testing, and how can you apply it effectively in mobile marketing?

A/B Testing: The Key to Campaign Optimization

An A/B test compares two versions of an element (such as an ad creative, landing page, or message) to determine which performs better.

The process involves randomly splitting the audience into comparable groups: one group sees version A, while the other sees version B. The results (e.g., click-through rates, conversions) are then compared to identify the more effective version.
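
The article does not specify how users are split into groups. A common approach is random assignment that stays stable per user, so the same person always sees the same version. The Python sketch below assumes each user has a stable ID. The function name `assign_variant` and the experiment name are hypothetical, and SHA-256 bucketing is only one reasonable choice of method.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split_a: float = 0.5) -> str:
    """Deterministically assign a user to variant 'A' or 'B' (hypothetical helper).

    Hashing the experiment name together with the user ID gives each user a
    stable, pseudo-random bucket, so the same user always sees the same
    version and both groups receive a comparable mix of users.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "A" if bucket < split_a else "B"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    groups = [assign_variant(u, "cta_wording_test") for u in users]
    print("A:", groups.count("A"), "B:", groups.count("B"))
```

Because the experiment name is part of the hash, launching a new test with a different name reshuffles users, so one test's split does not carry over into the next.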

A/B Testing: A Must-Have for Excelling in Mobile Marketing

In mobile marketing, every decision counts. With increased competition in app stores and rising acquisition costs, optimizing ad performance and user experience is crucial. Here’s why:

- Maximizing ROI: Testing different elements allows you to invest in what truly works. For instance, a study by Adjust found that campaigns optimized through A/B testing increase conversion rates by an average of 30%.

- Adapting to User Behavior: Behaviors vary across markets, age groups, and devices. For example, a mobile gaming company saw a 25% increase in clicks after tailoring separate ads for Android and iOS users.

- Continuous Improvement: A/B testing offers an iterative process to refine strategies. For example, a major retail player reduced its cost per install (CPI) by 15% by testing campaigns on local audiences.

Mobile Marketing: Key Elements to Test for Maximized Results

In mobile marketing, every aspect of a campaign can be optimized. Here are key elements to test, with concrete examples:

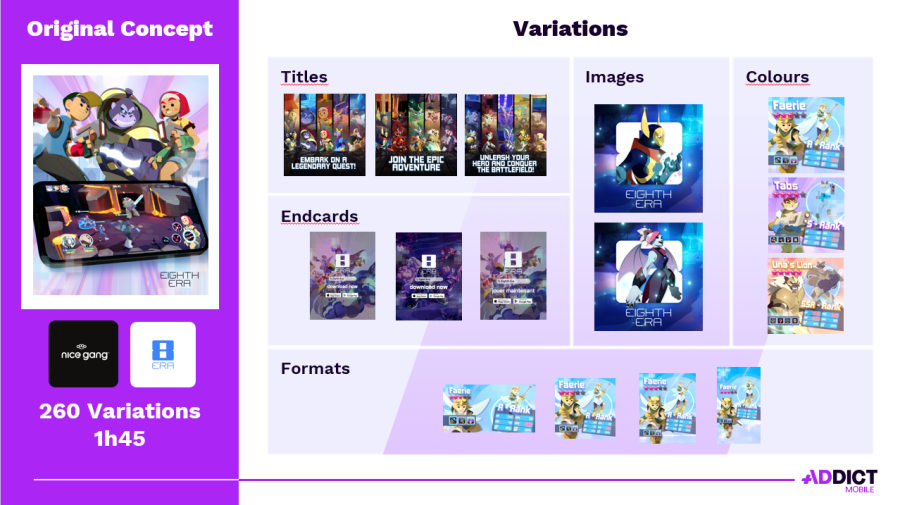

- Visual Creatives: Test variations of images or videos. For instance, a fitness app campaign might compare a video of an intense workout with one showing user testimonials.

- Ad Text: Experiment with different headlines and calls-to-action. Example: “Start Your Transformation Today!” versus “Unlock Your Potential with Our App!”

- Audiences: Segment targets by demographics or interests. For example, a gaming app could test audiences of gamers versus tech enthusiasts.

- Countries and Regions: Tailor campaigns to local specifics. An ad for a delivery app might feature regional dishes in visuals for different locations.

- Ad Formats: Compare the effectiveness of banners, interstitials, or rewarded videos based on user preferences.

- Landing Pages: Modify structure or interactive elements. For instance, a page with a prominent “Download” button versus one with more details before the call-to-action.

Discover our approach to testing your creatives efficiently!

Successful A/B Testing: Best Practices to Follow

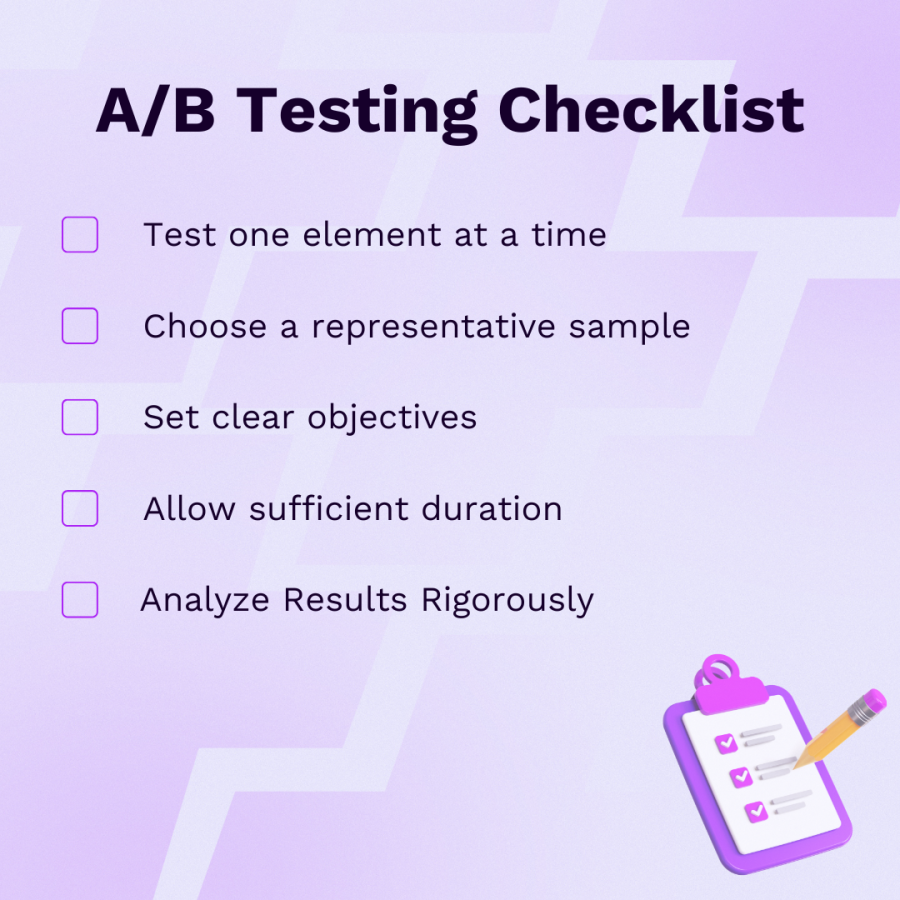

To maximize your test results, follow these best practices:

1. Test One Element at a Time: This ensures reliable results. For example, test only the headline before changing the color of a button.

2. Choose a Representative Sample: Ensure groups A and B are comparable in demographics and behavior. An overly specific audience may skew results.

3. Set Clear Objectives: What KPI do you aim to improve (CTR, CVR, ARPU)? For instance, an installation campaign might target a CPI below €2.

4. Allow Sufficient Duration: Let the test run for at least a week to gather meaningful data unless you have a high user volume.

5. Analyze Results Rigorously: Use analysis tools to avoid bias and statistically validate results. Avoid conclusions based on minor differences or short periods.
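
Best practice 5 calls for validating results statistically. For a rate metric such as CTR or CVR, one standard option is a two-proportion z-test, which relies on a normal approximation and assumes reasonably large, independent samples. The sketch below uses only Python's standard library; the function name is hypothetical and the counts in the example are invented for illustration.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two rates.

    conv_* are successes (clicks, installs, sign-ups); n_* are trials
    (impressions, clicks, visitors) for variants A and B.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions perform equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pooled rate is 0 or 1; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 2,000 impressions per variant, 9% vs 12% CTR.
    z, p = two_proportion_z_test(conv_a=180, n_a=2000, conv_b=240, n_b=2000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up counts, z is roughly 3.1 and the p-value is well below 0.05, so the gap is unlikely to be random noise. With only 200 impressions per variant, the same 9% and 12% rates would not reach significance, which is why minor differences on small samples should not drive decisions.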

Common Mistakes to Avoid:

- Changing multiple variables simultaneously: This makes it impossible to identify the element with the most impact.

- Stopping the test too early: You may act on random fluctuations or miss long-term trends.

- Ignoring segmentation biases: Groups A and B must be comparable for valid results.
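
One way to avoid stopping a test too early is to decide its size before launch. The sketch below uses the standard normal-approximation sample-size formula for comparing two proportions. The 5% significance level and 80% power defaults are common conventions rather than figures from this article, the one-week minimum mirrors best practice 4, and the function names and traffic numbers are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_base: float, p_target: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in EACH group to detect a change from p_base to p_target."""
    if not (0 < p_base < 1 and 0 < p_target < 1) or p_base == p_target:
        raise ValueError("rates must lie in (0, 1) and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (p_base + p_target) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p_base * (1 - p_base)
                                      + p_target * (1 - p_target))) ** 2
    return math.ceil(numerator / (p_target - p_base) ** 2)


def estimate_duration_days(n_per_group: int, daily_users: int, min_days: int = 7) -> int:
    """Days needed to fill both groups, never shorter than min_days."""
    return max(min_days, math.ceil(2 * n_per_group / daily_users))


if __name__ == "__main__":
    n = sample_size_per_group(0.09, 0.12)  # e.g. a CTR lift from 9% to 12%
    print("users per group:", n)
    print("days at 300 users/day:", estimate_duration_days(n, daily_users=300))
```

Under these assumptions, detecting a lift from 9% to 12% needs about 1,640 users per group, or roughly 11 days at a hypothetical 300 eligible users per day. Smaller expected lifts need much larger samples, because the required size grows with the inverse square of the difference.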

Measuring Impact: The Best Tools and KPIs for A/B Tests

To evaluate and learn from your tests, it’s essential to select the right tools and focus on relevant KPIs. Here’s a detailed approach:

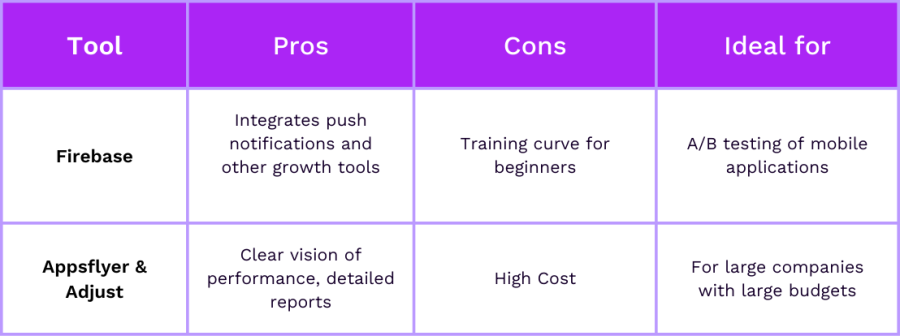

Analysis Tools: Advantages and Disadvantages

- Firebase: Ideal for A/B testing on mobile applications. Advantage: integrates push notifications and other growth tools. Disadvantage: learning curve for beginners.

- Adjust and AppsFlyer: These platforms offer a clear view of mobile campaign performance. Their reports are detailed, but their cost can be an obstacle for small businesses.

KPIs to Monitor and Their Utility

- Click-Through Rate (CTR): Measures ad attractiveness.

Example: A variation with the CTA “Discover Now” achieved a 12% CTR compared to 9% for “Learn More.”

- Cost Per Install (CPI): Evaluates budget efficiency.

Example: An A/B-tested campaign reduced CPI from €1.50 to €1.20.

- Conversion Rate (CVR): Measures the percentage of users taking a specific action.

Example: A simplified sign-up page improved CVR from 20% to 35%.

- Retention Rate: Shows user loyalty over time.

Example: A change in onboarding improved D7 retention by 5%.

- Lifetime Value (LTV): Measures overall profitability.

Example: A segmented audience generated 15% higher LTV.
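
Most of the KPIs above are simple ratios, but reported changes can be ambiguous: "improved D7 retention by 5%" could mean five percentage points or a 5% relative increase. The sketch below computes the ratio KPIs from raw counts and reports both kinds of change. The `VariantStats` class, its field names, the choice of click-to-install as the CVR, and the sample numbers are hypothetical; LTV is left out because its calculation depends on your revenue model.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw counts for one variant of a campaign (hypothetical field names)."""
    impressions: int
    clicks: int
    installs: int
    spend_eur: float
    day7_active: int  # installers still active 7 days after install

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions      # click-through rate

    @property
    def cpi(self) -> float:
        return self.spend_eur / self.installs      # cost per install (EUR)

    @property
    def cvr(self) -> float:
        return self.installs / self.clicks         # click-to-install conversion

    @property
    def d7_retention(self) -> float:
        return self.day7_active / self.installs    # day-7 retention


def relative_change(before: float, after: float) -> float:
    """Change as a fraction of the starting value (0.2 means +20%)."""
    return (after - before) / before


def point_change(before_rate: float, after_rate: float) -> float:
    """Change between two rates, in percentage points."""
    return (after_rate - before_rate) * 100


if __name__ == "__main__":
    print(f"CPI change: {relative_change(1.50, 1.20):.0%}")
    print(f"CVR change: {point_change(0.20, 0.35):.0f} points, "
          f"{relative_change(0.20, 0.35):.0%} relative")
```

Applied to the examples above, the CPI drop from €1.50 to €1.20 is a 20% relative decrease, while the CVR move from 20% to 35% is a gain of 15 percentage points, or 75% in relative terms. Stating which kind of change you report avoids misreading results.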

For valid results, perform in-depth statistical analyses using tools like Tableau or Excel, and avoid relying solely on first impressions.
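
For metrics that are averages of per-user values, such as revenue per user or LTV, a test on rates does not apply directly. One option that does not depend on any particular tool is a percentile bootstrap confidence interval for the difference in means, which you can compute before charting the results in Tableau or Excel. This is a swapped-in technique, and the function name, resample count, seed, and revenue figures below are illustrative assumptions.

```python
import random


def bootstrap_diff_ci(a: list[float], b: list[float], n_resamples: int = 2000,
                      confidence: float = 0.95, seed: int = 42) -> tuple[float, float]:
    """Percentile bootstrap confidence interval for mean(b) - mean(a)."""
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    diffs = []
    for _ in range(n_resamples):
        # Resample each group with replacement, keeping its original size.
        res_a = rng.choices(a, k=len(a))
        res_b = rng.choices(b, k=len(b))
        diffs.append(sum(res_b) / len(res_b) - sum(res_a) / len(res_a))
    diffs.sort()
    lower = diffs[int((1 - confidence) / 2 * n_resamples)]
    upper = diffs[int((1 + confidence) / 2 * n_resamples) - 1]
    return lower, upper


if __name__ == "__main__":
    # Hypothetical 30-day revenue per user (EUR) for each variant.
    gen = random.Random(0)
    revenue_a = [gen.choice([0.0, 0.0, 0.0, 0.99, 4.99]) for _ in range(500)]
    revenue_b = [gen.choice([0.0, 0.0, 0.99, 0.99, 4.99]) for _ in range(500)]
    low, high = bootstrap_diff_ci(revenue_a, revenue_b)
    print(f"95% CI for B - A: [{low:.2f}, {high:.2f}] EUR per user")
```

If the whole interval lies above zero, variant B's higher average revenue is unlikely to be explained by sampling noise alone; an interval that straddles zero means the test has not yet separated the two variants.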

Do not hesitate to contact our teams.

Addict can help you improve your performance.

Conclusion

A/B testing is more than just a testing method; it’s a continuous optimization strategy essential for mobile marketers. By regularly testing your creatives, ad copy, and parameters, you improve not only your campaigns but also your understanding of user behavior. Adopt a structured, data-driven approach to turn your tests into measurable successes.